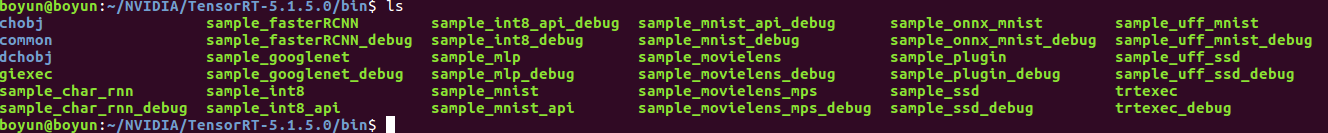

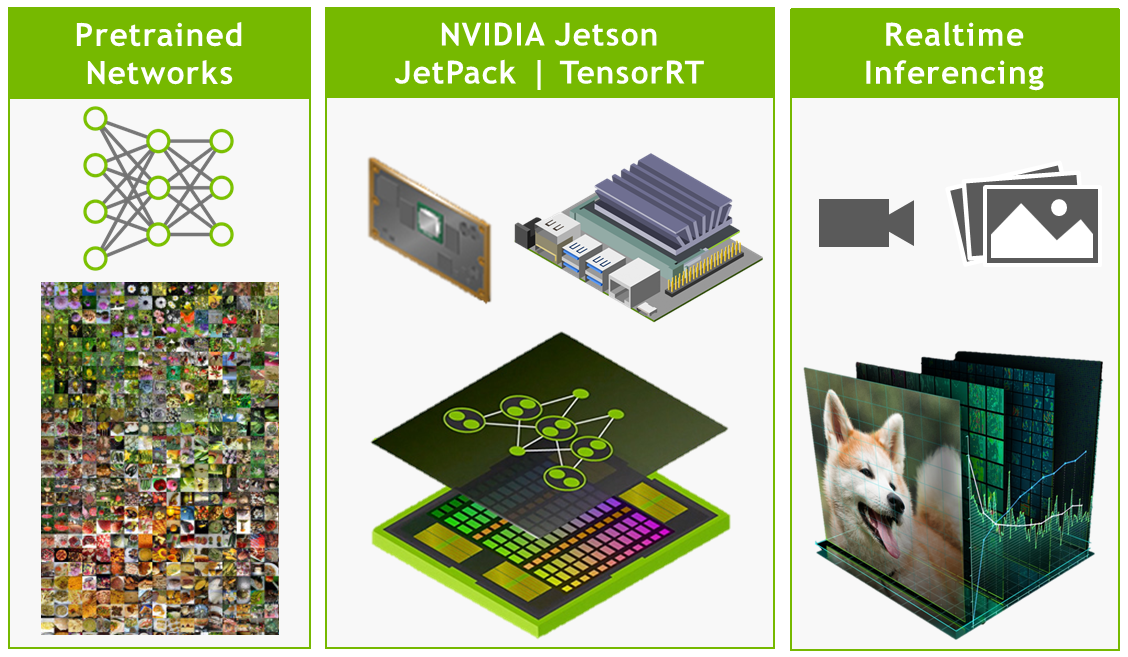

GitHub - chenzhi1992/TensorRT-SSD: Use TensorRT API to implement Caffe-SSD, SSD(channel pruning), Mobilenet-SSD

GitHub - brokenerk/TRT-SSD-MobileNetV2: Python sample for referencing pre-trained SSD MobileNet V2 (TF 1.x) model with TensorRT

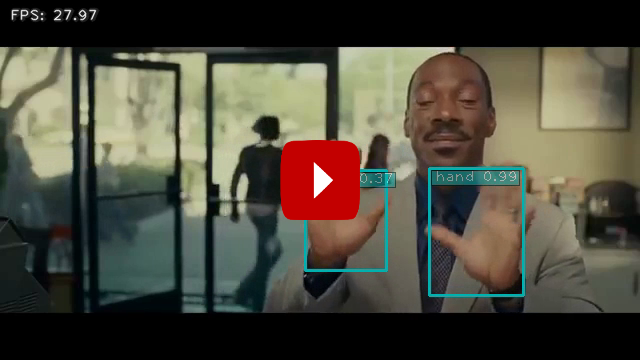

GitHub - tjuskyzhang/mobilenetv1-ssd-tensorrt: Got 100fps on TX2. Got 1000fps on GeForce GTX 1660 Ti. Implement mobilenetv1-ssd-tensorrt layer by layer using TensorRT API.

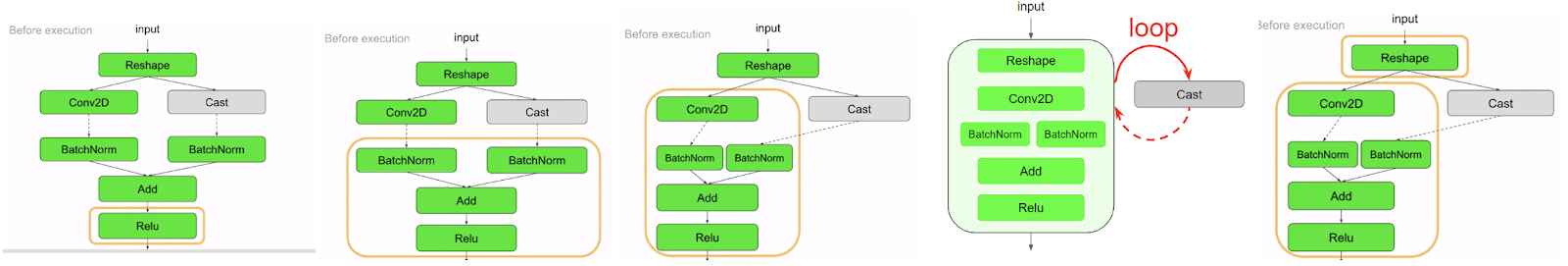

Run Tensorflow 2 Object Detection models with TensorRT on Jetson Xavier using TF C API | by Alexander Pivovarov | Medium