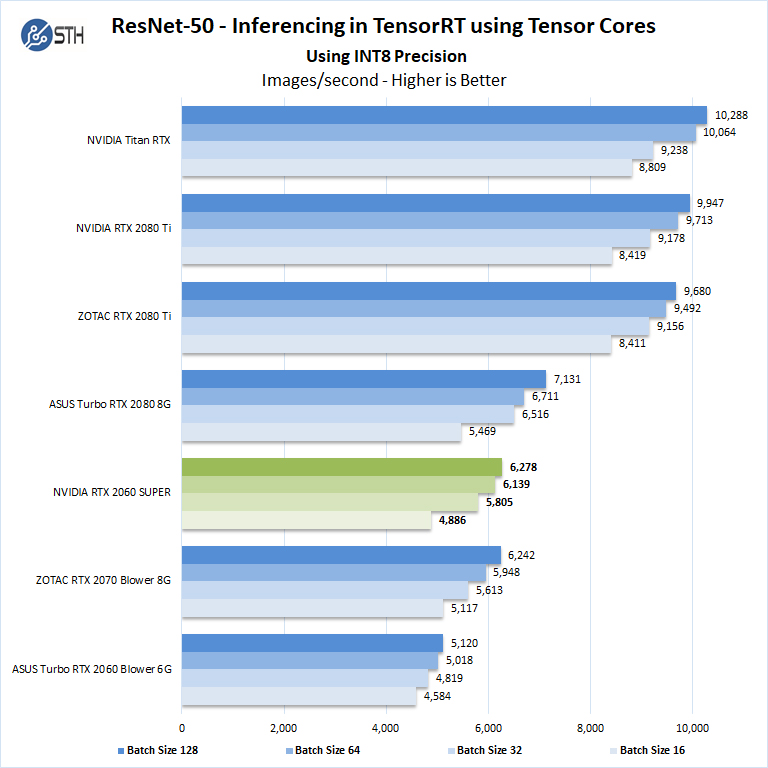

RTX Titan TensorFlow performance with 1-2 GPUs (Comparison with GTX 1080Ti, RTX 2070, 2080, 2080Ti, and Titan V)

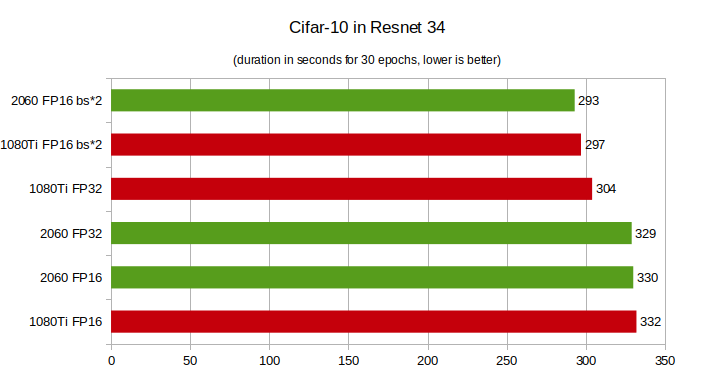

RTX 2060 Vs GTX 1080Ti Deep Learning Benchmarks: Cheapest RTX card Vs Most Expensive GTX card, by Eric Perbos-Brinck (Towards Data Science)

![\[D\] Which GPU(s) to get for Deep Learning (Updated for RTX 3000 Series) : r/MachineLearning](https://external-preview.redd.it/5u8jDdRCaP-A20wEDShn0DFiQIgr2DG_TGcnakMs6i4.jpg?auto=webp&s=25a683283f0d0a367ff1476983de4b134c72d57f)