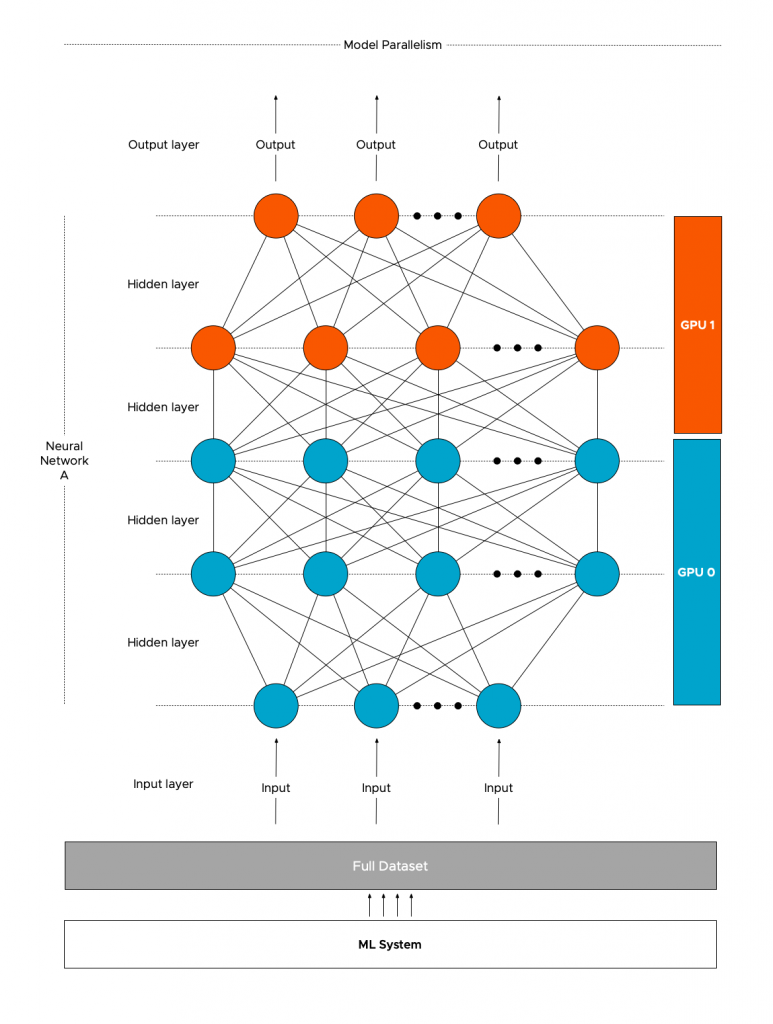

Learn PyTorch Multi-GPU properly. I'm Matthew, a carrot market machine… | by The Black Knight | Medium

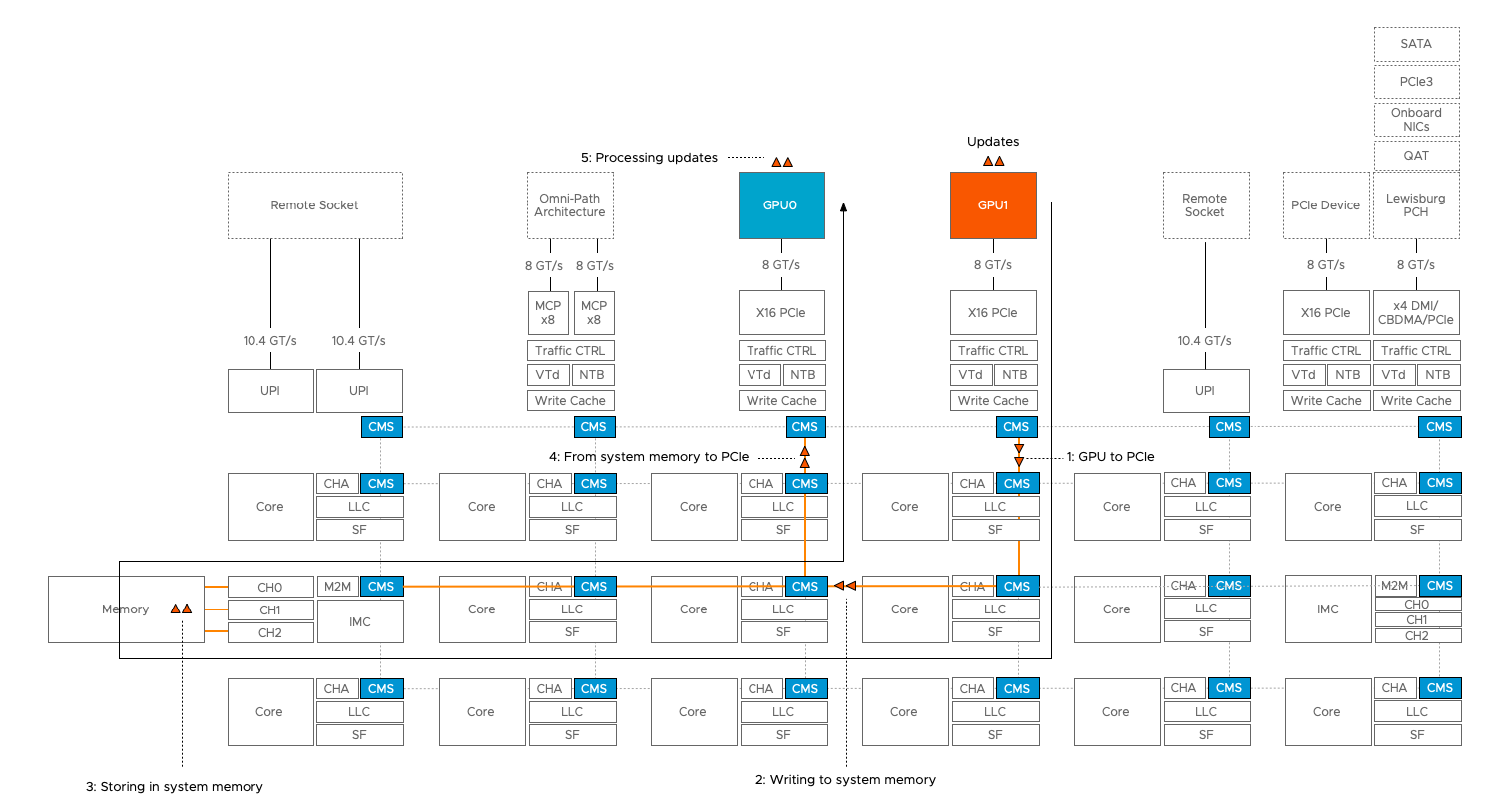

DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science